pyRDDLGym

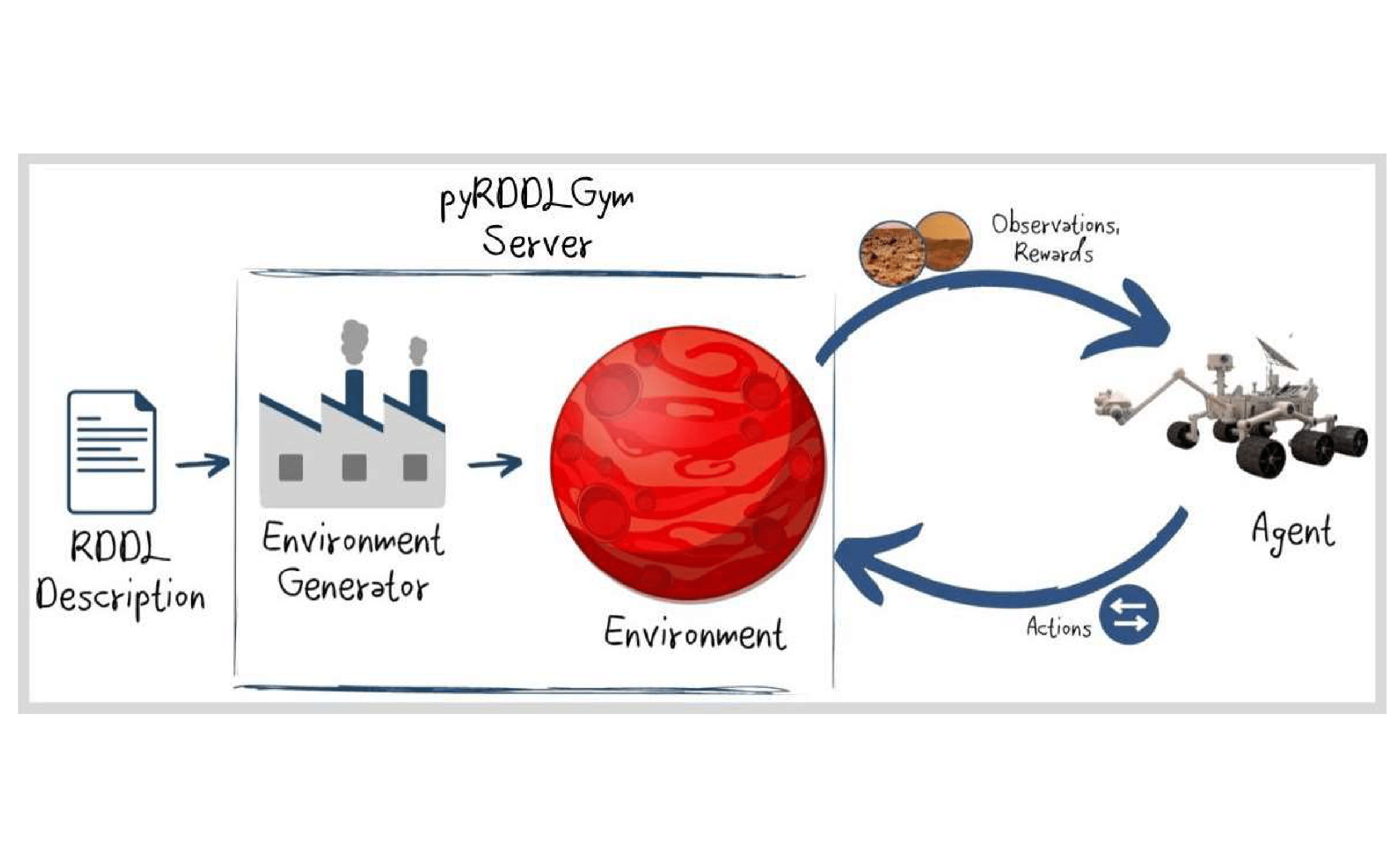

The pyRDDLGym package is a Python toolkit that automatically generates OpenAI Gym environments from Relational Dynamic Influence Diagram Language (RDDL) description files. It serves as the official parser, simulator, and evaluation system for RDDL in Python, making it easier to create and work with dynamic stochastic environments in any pipeline that requires an OpenAI Gym environment. pyRDDLGym includes customizable visualization and recording tools for debugging and interpreting plans, and supports a growing ecosystem of planning tools that aims to provide standardized benchmarks and baseline problems for the planning community.

Purpose

Relational Dynamic Influence Diagram Language (RDDL) is a compact, easily modifiable representation language for discrete-time control in dynamic stochastic environments (see the web-based intro, full tutorial, and language spec). One of its core benefits is its object-oriented relational (template) specification, which allows model instances to scale from one object to thousands of objects without changing the domain model (e.g., Wildfire; see the web tutorial).

The purpose of pyRDDLGym is to provide automatic translation of RDDL planning domain description files to standard OpenAI Gym environments. This means you inherit the benefits of a structured planning description within your existing reinforcement learning or planning framework based on OpenAI Gym. It also provides customizable visualization and recording tools to facilitate domain debugging and plan interpretation (Taitler et al., 2023).

pyRDDLGym was the official evaluation system in the 2023 International Planning Competition (Taitler et al., 2024).

Examples

Translating RDDL domain and instance files to an OpenAI Gym environment takes a single call:

import pyRDDLGym

env = pyRDDLGym.make(domain="/path/to/domain.rddl", instance="/path/to/instance.rddl")

A large number of built-in RDDL domains and instances (including standard OpenAI Gym domains) are provided in rddlrepository and can be loaded by name:

import pyRDDLGym

env = pyRDDLGym.make(domain="Cartpole_Continuous_gym", instance="0")

pyRDDLGym facilitates interaction with an environment using a policy, e.g. a random exploration policy:

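from pyRDDLGym.core.policy import RandomAgent

# env is the environment created with pyRDDLGym.make in the previous example
agent = RandomAgent(action_space=env.action_space, num_actions=env.max_allowed_actions)

# run one rendered episode and read the mean return from the evaluation result
total_reward = agent.evaluate(env, episodes=1, render=True)['mean']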
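For readers who want to see what an evaluation call like the one above might do under the hood, here is a minimal sketch of a manual interaction loop. It assumes the environment follows the Gymnasium-style interface, where `reset()` returns `(state, info)` and `step(action)` returns `(state, reward, terminated, truncated, info)`, and that actions are passed as dictionaries. `CoinFlipEnv` and `run_episode` are hypothetical names introduced only for illustration; they are not part of pyRDDLGym, and the stand-in environment exists so the sketch runs without any RDDL files.

```python
import random
from typing import Any, Callable, Dict, Tuple


class CoinFlipEnv:
    """Hypothetical stand-in environment (NOT part of pyRDDLGym).

    Pays reward 1.0 whenever the action's "guess" matches a hidden coin flip.
    """

    def __init__(self, horizon: int = 10, seed: int = 0) -> None:
        self.horizon = horizon
        self._rng = random.Random(seed)
        self._t = 0

    def reset(self) -> Tuple[Dict[str, Any], Dict[str, Any]]:
        # Gymnasium-style reset: returns (state, info)
        self._t = 0
        return {"t": self._t}, {}

    def step(self, action: Dict[str, int]):
        # Gymnasium-style step: (state, reward, terminated, truncated, info)
        self._t += 1
        coin = self._rng.randint(0, 1)
        reward = 1.0 if action["guess"] == coin else 0.0
        truncated = self._t >= self.horizon
        return {"t": self._t}, reward, False, truncated, {}


def run_episode(env: Any,
                policy: Callable[[Dict[str, Any]], Dict[str, Any]],
                horizon: int) -> float:
    """Roll out one episode of at most `horizon` steps; return the total reward."""
    total_reward = 0.0
    state, _ = env.reset()
    for _ in range(horizon):
        action = policy(state)  # map the current state to an action dictionary
        state, reward, terminated, truncated, _ = env.step(action)
        total_reward += reward
        if terminated or truncated:
            break
    return total_reward


if __name__ == "__main__":
    demo_env = CoinFlipEnv(horizon=10, seed=0)
    # A trivial policy that always guesses 0
    print(run_episode(demo_env, lambda state: {"guess": 0}, horizon=10))
```

If the assumed interface holds, the same `run_episode` helper could be given the environment returned by `pyRDDLGym.make` together with a callable that wraps the agent's action selection, which makes it easy to add per-step logging or custom stopping rules.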